Machine Reading

As Elena Pierazzo states in her article, A Rationale of Digital Documentary Editions, “The process of selection is inevitably an interpretative act: what we choose to represent and what we do not depends either on the particular vision that we have of a particular manuscript or on practical constraints” (Pierazzo 3). Pierazzo’s view of on the process of marking up texts is similar to our first step within the process of a Distant Reading project – asking which general question you would like to research.

In terms of the Digital Humanities, I believe that the term “Machine Reading” is often misleading. The title implies that only a machine is reading the text and there is no human interaction. However, this is completely untrue. As Pierazzo mentions the process of selecting what you want to mark up within a text is an “interpretative act”. Thus, a human must choose which information within the text is essential to the reader.

Stylometry

Using such platforms as Lexos and TEI – oXygen, I have learned a multitude of new ways to approach analyzing my corpora. Platforms within the field of stylometry can be used to conduct macro-level and/or micro-level research. It is important to recognize the ability to use both Lexos and oXygen in tandem. In other words, you can perform both a distant reading with Lexos and a close reading with oXygen. Therefore, you can do differential analyses, synthesizing the various statistics and interpretations from each platform.

Lexos

Lexos is a great platform that has a myriad of options to clean and investigate your corpora. I was able to upload all of my documents to Lexos with no problems. Then, I had the opportunity to “scrub” my documents of stop words or any special characters. Fortunately, I had the stop word list from Jigsaw that I uploaded straight to Lexos. Then, I was able to visualize and analyze the data of my corpora. Under the visualize tab, there is WordCloud, MultiCloud, RollingWindow Graph, and BubbleViz. Some of the options are similar to other web sites, like Wordle. However, under the analyze tab, there is Statistics, Clustering – Hierarchical and K-means, Topword, etc. The most impressive and useful means to analyze my corpus was through the dendrogram and the zeta-test. Additionally, Lexos can cut and segment your texts into smaller documents by characters, words, or lines. This feature is helpful when using some DH platforms, like Jigsaw, that work better with smaller documents.

Investigating in Lexos

When skyping in with Dr. James O’Sullivan, I was able to learn of countless hermeneutical approaches using the features of Lexos and multi-faceted digital platforms. Stylometrics can produce, as Dr. O’Sullivan stated, “statistically significant” information, pending that the researcher is well-acquainted with his or her texts. Stylometrics supports critical interpretations and close readings. For example, Dr. O’Sullivan showed us the author attribution project used to detect J.K. Rowling’s anonymous books. The digital humanists used dendrograms, which measure texts in terms of similarities in vocabulary, to prove that a text must have been written by a certain author due to the overwhelming statistical evidence.

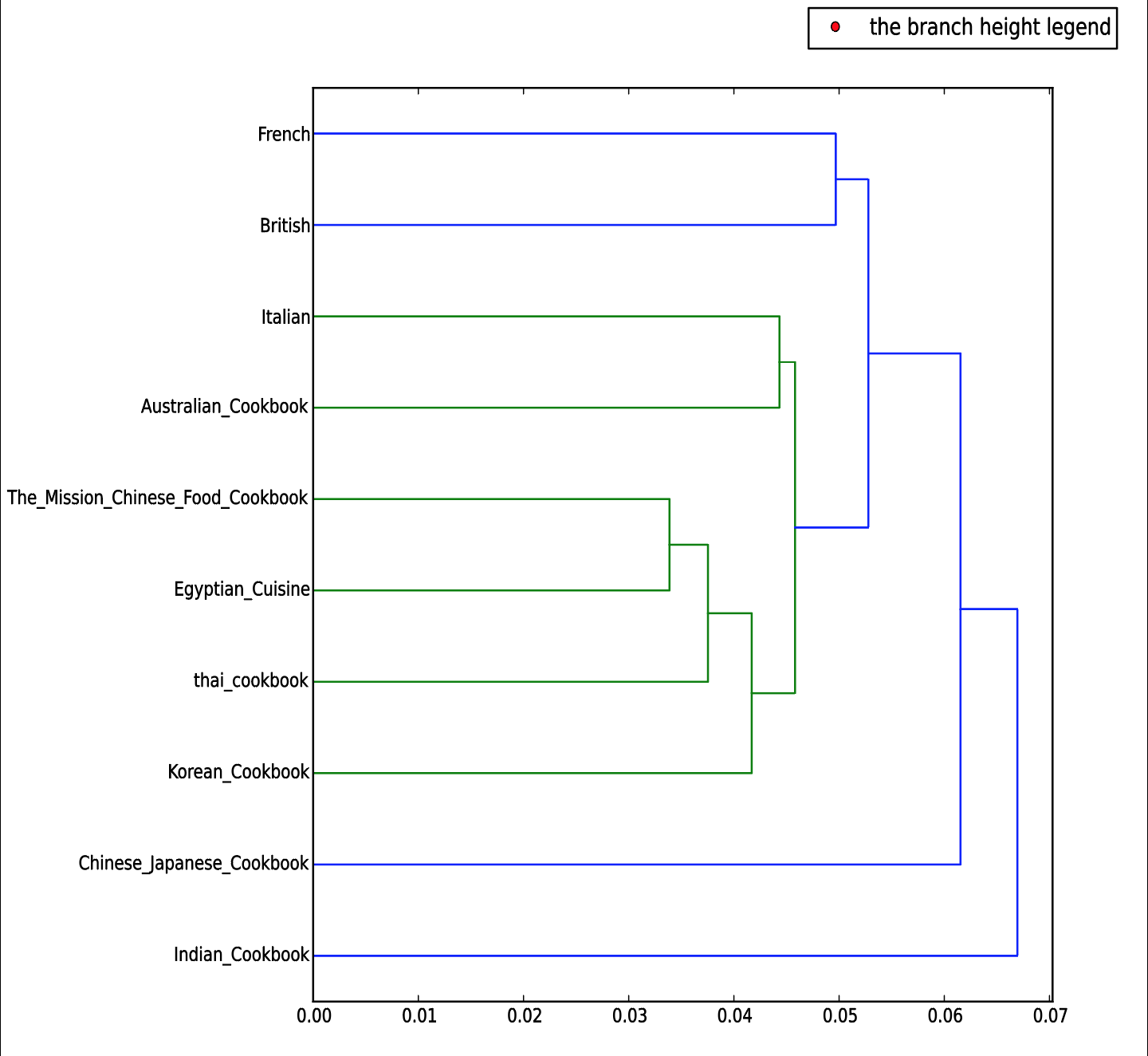

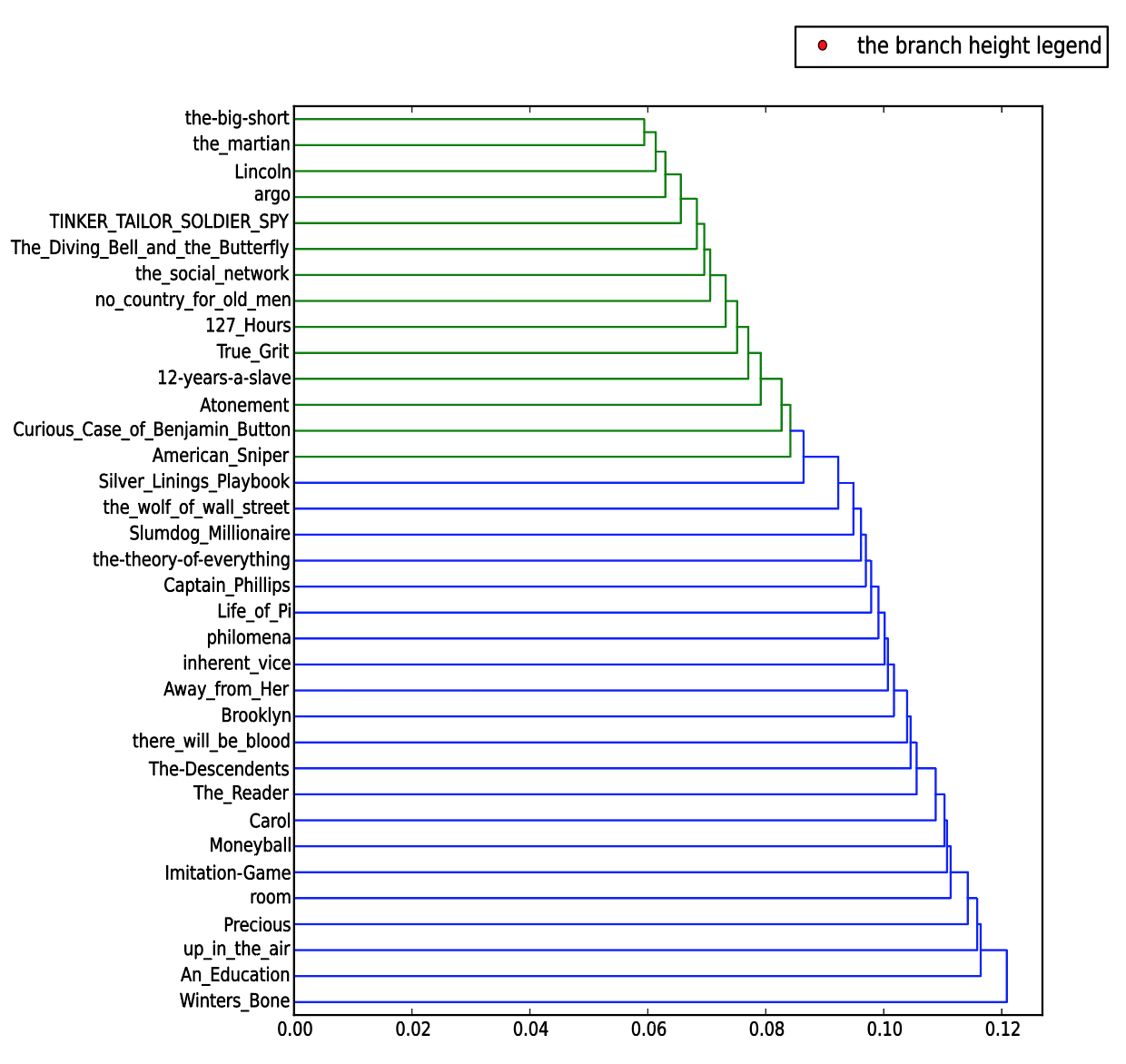

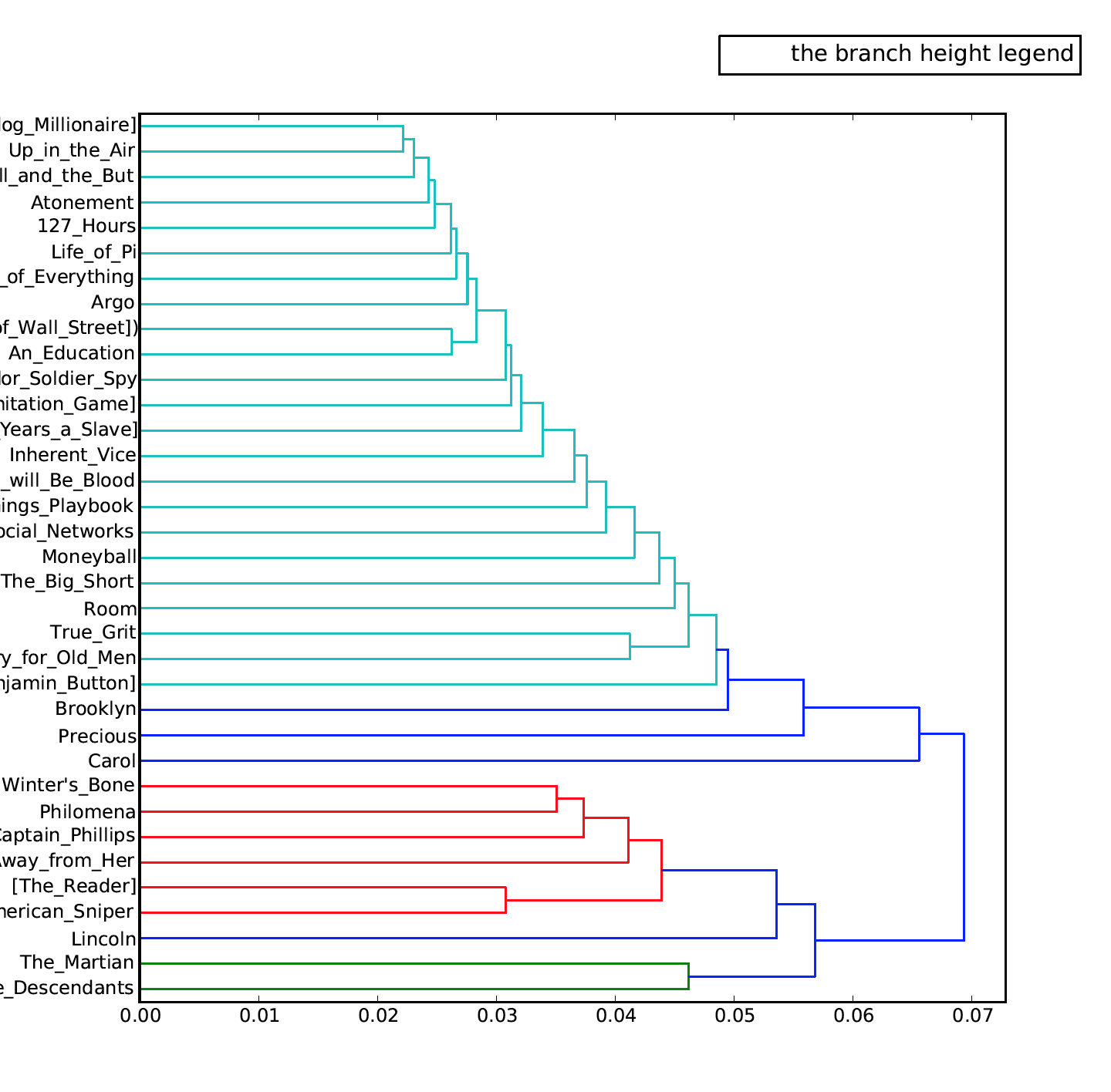

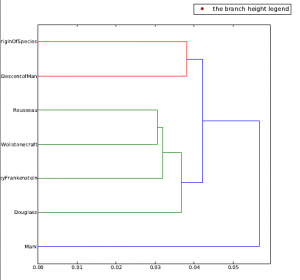

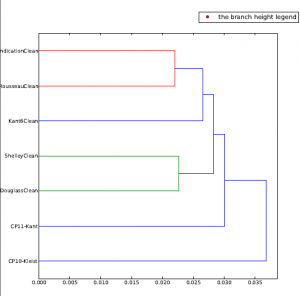

In Lexos, I made many dendrograms that displayed particular peculiarities in my corpora.

Both dendrograms display texts that I have read in HUMN 150 that are considered post-Enlightenment period. Specifically these dendrograms interest me because there is much similarity in the vocabulary throughout all the post-Enlightenment texts, except Marx, Kant, and Kleist. For example, when we read Frederick Douglass’s narrative, there are specific passages that contain lexicon reminiscent of Shelley’s Creature in Frankenstein. Moreover, Shelley and Douglass are shown to have similar vocabulary in both dendrograms. Even more so, Darwin’s On Origin of Species and The Descent of Man are paired together; this example supports the claim that stylometry produces “statistically significant” information.

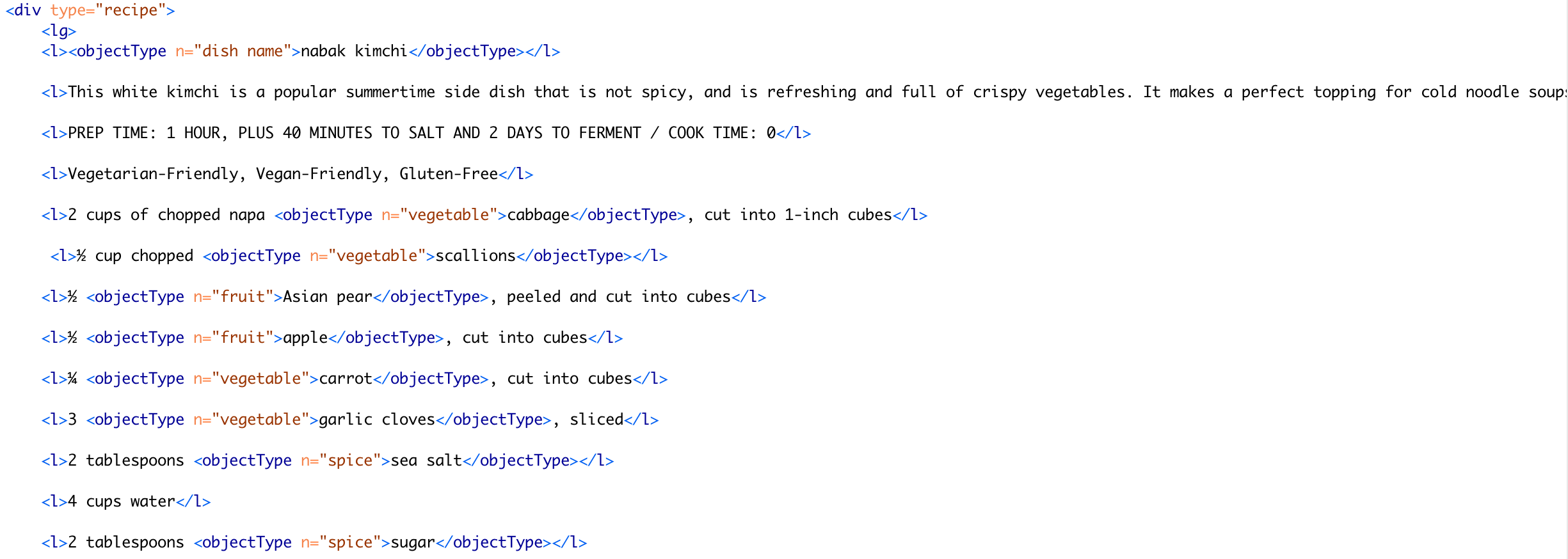

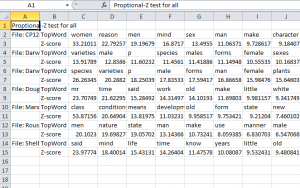

However, I was not completely satisfied with the results from my dendrogram. I wanted to create a zeta test which produced the distinctive words in each text based on the 100 MFW (most frequent words).

As you can see from this zeta test, the first line is Mary Wollstonecraft’s A Vindication of Women’s Rights shows the most unique/distinctive word to be “women” and for Jean-Jacques Rousseau’s Discourse on the Origin of Inequality shows the most unique/distinctive word to be “men.” Rousseau advocated for rights in general for all people during his Discourse. It is in Emile where he makes clear distinctions between men and women. However, the zeta test proves he is clearly still focusing in on men or generalizing the population with a masculine pronoun. (remember this is a translation) Also, these Antconc screenshots show collocations of the terms “women” and “men.” The first is of “women”; the second is “men.” If Rousseau is remaining fairly neutral throughout the Discourse, why does he use the term “men” more than “women”?

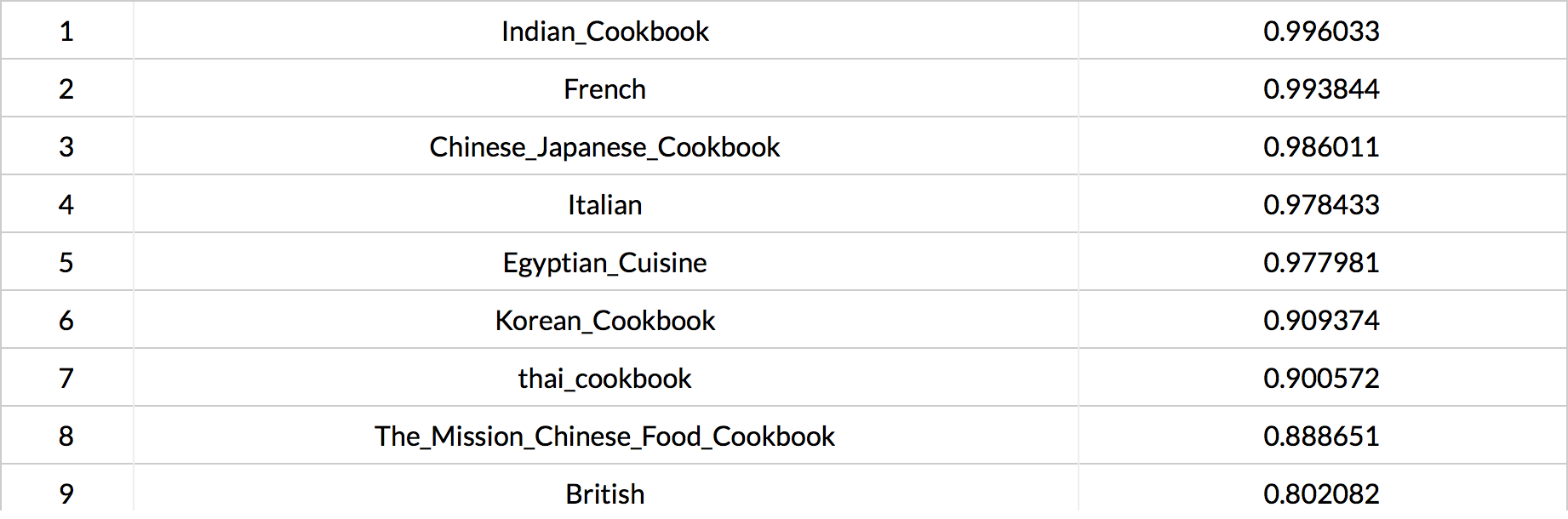

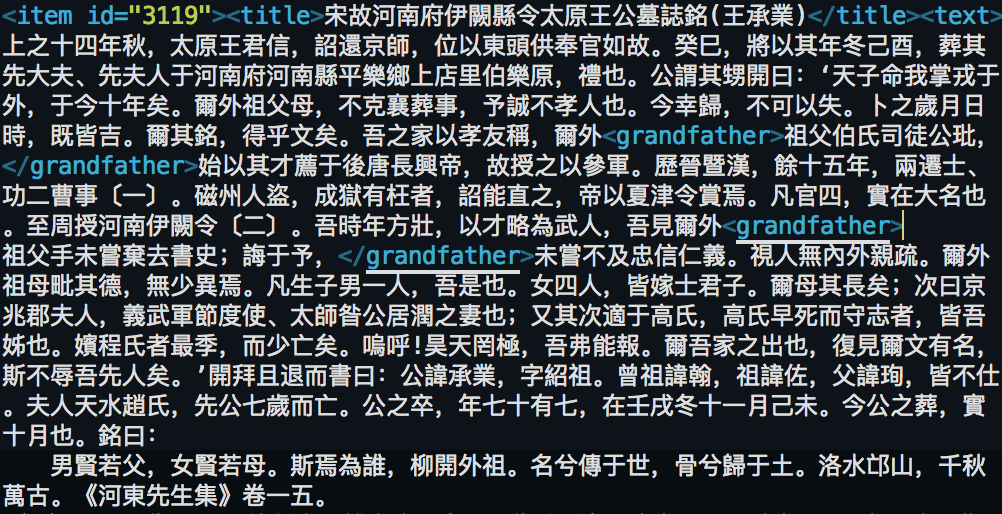

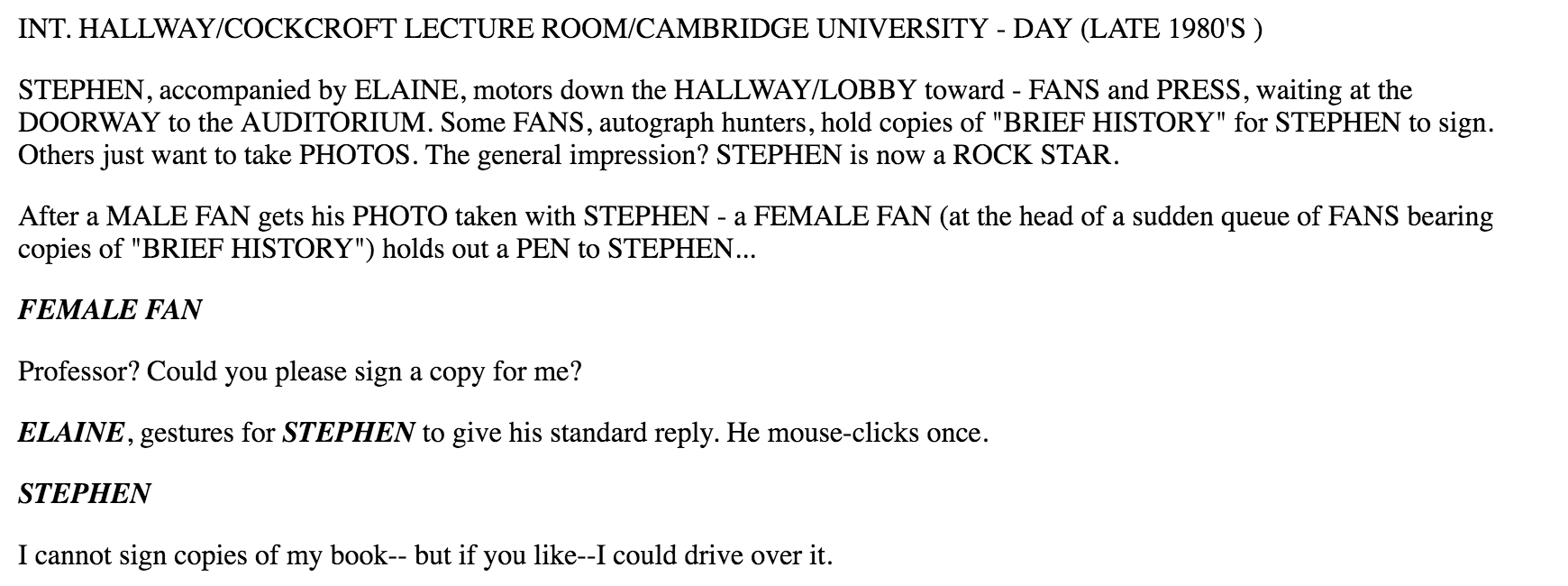

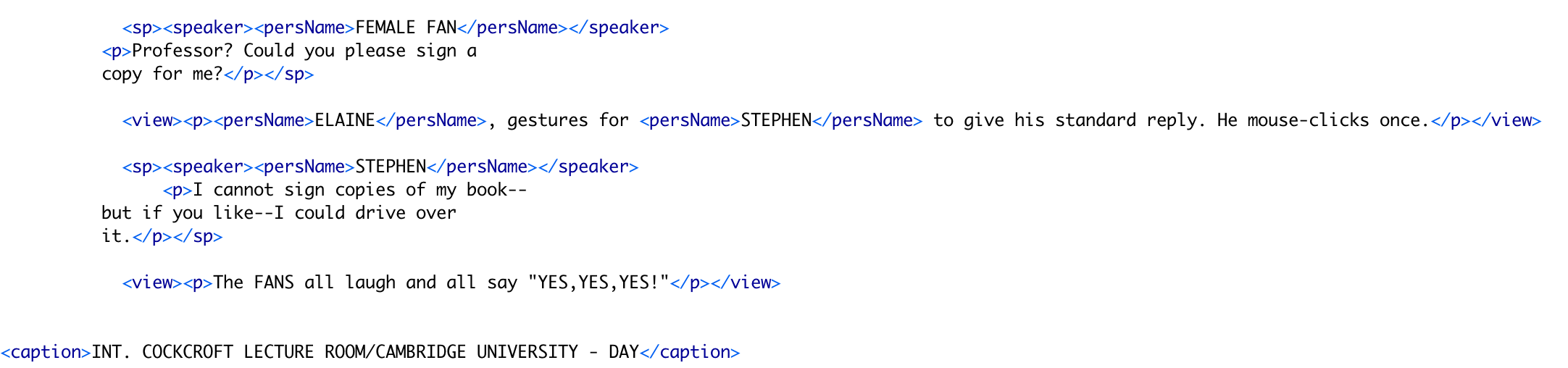

TEI- oXygen Mark-up

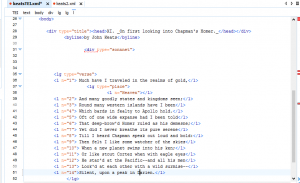

I was able to practice micro-level stylometry through XML. TEI is a fascinating way to mark up your text. For example, I marked up a poem by John Keats, and was able to label certain lexical items depending how the author uses specific terms. Also, since you are the person marking up a text, you are picking out whether terms like “realms of gold” connotes “Heaven” or not. You need to understand the poem; it is much more than the machine.

I have marked up Keat’s poem so that each verse could be shown separately for different annotations and meanings. The biggest problem with marking up texts in a semantic way is time constraints. As Pierazzo states, “Which features of the primary source are we to reproduce in order to be sure that we are following ‘best practice’?” (Pierazzo 4) It is evident that a person could tediously mark up a text for an extended amount of time; however, you must ask yourself, “What is the end result?” and “Have I answered, or helped in answering, my research question?

Pierazzo discusses the ways in which TEI helps to minimize the foreignness of the main text and the author. She states, “It is true that a diplomatic edition is not a surrogate for the manuscript when a facsimile is also present, but it is rather a set of functions and activities to be derived from the manuscript which challenge the editorial work and force a more total engagement of the editor with the source document” (Pierazzo 10).

Final Thoughts

Stylometry on both a macro- and micro-level have given me a new perspective on my corpus. Through TEI, I can markup certain texts and find the semantic meanings of specific passages. It is a way to become more familiar with your texts. Also, the dendrograms and zeta tests fill holes in my research that answer questions like, “Compared to Marx and Rousseau who discusses private property straightforwardly?” It is imperative to have both macro- and micro- analysis to give a differential view of the research results.